That’s why in this article we are going to look at the process of web scraping using the Python programming language.

To follow along, you need a working knowledge of Python and HTML.

Before we proceed, note that web scraping is not the only way of getting data from websites. Top websites such as Google, Spotify, and Twitter provide APIs that give users easy access to their data. However, the number of API calls allowed per day is usually limited unless you pay for the service.

On top of that, there are lots of other websites that do not offer an API at all, so we may be left with little or no choice but to do the scraping ourselves.

Keep in mind that some sites object to having their content scraped without permission, so you should be careful about which websites you decide to scrape.

In this tutorial, we will use the requests library together with the BeautifulSoup library. There are other Python libraries for web scraping apart from BeautifulSoup, such as Selenium (which is preferred for Quality Assurance testing of websites), Scrapy, Mechanize, and a host of others. There is also urllib, which can serve the same purpose as the requests library.

So let’s get started.

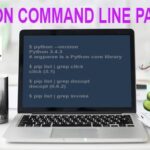

To install the requests library, you can use the pip command:

pip install requests

That should install the requests library.

Then, to install the BeautifulSoup library, you can use the pip command as well:

pip install beautifulsoup4

That’s it. Our library is ready for making our soup... a soup of data, more like. Let’s take a look at what we are going to use the requests library for. It actually isn’t needed for much; all we will use it for is loading the contents of the desired webpage.

In every script you write, you will have to import the requests and BeautifulSoup libraries using:

import requests

from bs4 import BeautifulSoup as bs

This adds the name requests to the namespace, so that Python knows what requests refers to when you use it. It does the same for BeautifulSoup, but under the shorter alias bs, so we can refer to BeautifulSoup simply as bs.

To load a page, we pass its URL to the get() function of requests and assign the result to the variable webpage. The URL should be a string literal or a string stored in a variable.

Next, the content attribute of the response gives us the raw content of the webpage, which we assign to the variable webcontent. There is more going on under the hood, but let’s keep it at this for the sake of simplicity.

That’s all we will do with the requests library. Next, we simply convert the downloaded content into a BeautifulSoup object.

This parses the downloaded content into readable HTML, which we store in the variable htmlcontent. Now we can begin scraping from the HTML using the methods available in BeautifulSoup.
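The three steps described above (fetch the page, take its content, parse it) can be sketched roughly as follows. The function names fetch_soup and parse_html are hypothetical helpers introduced only for this illustration, and the timeout value, the raise_for_status() call, and the choice of the built-in "html.parser" parser are assumptions rather than something the article prescribes.

```python
import requests
from bs4 import BeautifulSoup as bs


def parse_html(webcontent):
    """Turn raw HTML (bytes or str) into a BeautifulSoup object."""
    # "html.parser" ships with Python; other parsers are possible (assumption).
    return bs(webcontent, "html.parser")


def fetch_soup(url):
    """Download the page at `url` and return it as a BeautifulSoup object."""
    # Send an HTTP GET request; the timeout is an added safety net (assumption).
    webpage = requests.get(url, timeout=10)
    # Raise an exception for 4xx/5xx responses instead of parsing an error page.
    webpage.raise_for_status()
    # .content is an attribute holding the raw bytes of the response body.
    webcontent = webpage.content
    return parse_html(webcontent)
```

Calling fetch_soup() with the URL of a page you are allowed to scrape would give you the htmlcontent object used in the rest of this tutorial.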

In order to understand things better, let’s work with this snippet of HTML code.

<div class="Tech_head">Technology</div>

<div class="content">

<h1>XYZ praised after inspirational comment</h1>

<img src="xyzlady.jpg" alt="lady" align="right" />

ABC spoke emotionally about the incident after her room was cancelled

"It’s why we have DEF," said host who must now attend a course on entrepreneurial studies.

13 July 1997

From the section Technology comments

Related content

Video

ABC account hijackers burgle homes

DEF time-cap ‘unworkable in London’

Video

‘I can’t find a home because of ABC’

</div>

BeautifulSoup gives us access to the content of this HTML snippet through different methods that we call on the variable htmlcontent.

The find() method looks for tags with the name <div></div>. If there is more than one tag with that name, it simply returns the first one it comes across.

So this should return the first <div> tag in the snippet, together with everything inside it.
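As a minimal sketch of find(), the example below parses a shortened stand-in for the snippet above. The HTML string and the use of "html.parser" are assumptions made for illustration; in a real script htmlcontent would come from a downloaded page.

```python
from bs4 import BeautifulSoup as bs

# Shortened stand-in for the article's snippet (assumption for illustration).
html = """
<div class="Tech_head">Technology</div>
<div class="content">
  <h1>XYZ praised after inspirational comment</h1>
  <img src="xyzlady.jpg" alt="lady" align="right" />
</div>
"""

htmlcontent = bs(html, "html.parser")

# find() returns only the first tag with the given name.
first_div = htmlcontent.find("div")
print(first_div)

# When no tag matches, find() returns None rather than raising an error.
missing = htmlcontent.find("table")
print(missing)
```

Because find() can return None, it is worth checking the result before using it on pages whose structure you do not control.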

What if we want to get all the tags with the name <div></div> and save them in a list, so that we can pick the one we need from it? For that, we use the find_all() method.
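A small sketch of find_all(), again on a shortened stand-in for the snippet (the HTML string and the "html.parser" choice are assumptions for illustration):

```python
from bs4 import BeautifulSoup as bs

# Shortened stand-in for the article's snippet (assumption for illustration).
html = """
<div class="Tech_head">Technology</div>
<div class="content">
  <h1>XYZ praised after inspirational comment</h1>
  <img src="xyzlady.jpg" alt="lady" align="right" />
</div>
"""

htmlcontent = bs(html, "html.parser")

# find_all() returns a list-like collection of every matching tag,
# in the order they appear in the document.
all_divs = htmlcontent.find_all("div")
print(all_divs)
```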

After running this piece of code, we would have:

[<div class="Tech_head">Technology</div>,

<div class="content">

<h1>XYZ praised after inspirational comment</h1>

<img src="xyzlady.jpg" alt="lady" align="right" />

ABC spoke emotionally about the incident after her room was cancelled

"It’s why we have DEF," said host who must now attend a course on entrepreneurial studies.

13 July 1997

From the section Technology comments

Related content

Video

ABC account hijackers burgle homes

DEF time-cap ‘unworkable in London’

Video

‘I can’t find a home because of ABC’

</div>]

Now, to get any of the <div> tags, we can simply index into the list and extract the one we need. But what if we need to pick a <div></div> tag according to certain attributes? For instance, say we need <div class="Tech_head"></div>. This means that we want <div> tags whose class attribute has the value "Tech_head".

The following code would do:
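As a hedged sketch, the attrs parameter of find() takes a dictionary of attribute names and values to match. The HTML string below is a shortened stand-in for the snippet, and "html.parser" is an assumed parser choice.

```python
from bs4 import BeautifulSoup as bs

# Shortened stand-in for the article's snippet (assumption for illustration).
html = """
<div class="Tech_head">Technology</div>
<div class="content">
  <h1>XYZ praised after inspirational comment</h1>
</div>
"""

htmlcontent = bs(html, "html.parser")

# Only <div> tags whose class attribute is "Tech_head" are considered.
tech_head = htmlcontent.find("div", attrs={"class": "Tech_head"})
print(tech_head)
```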

This would get us the <div class="Tech_head"></div> tag. If we need only the contents of the tag, without the tag itself, we access its .text attribute. So:
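A minimal sketch of reading .text, using a one-line stand-in for the relevant part of the snippet (the HTML string and the parser choice are assumptions):

```python
from bs4 import BeautifulSoup as bs

# One-line stand-in for the relevant tag (assumption for illustration).
html = '<div class="Tech_head">Technology</div>'

htmlcontent = bs(html, "html.parser")

tech_head = htmlcontent.find("div", attrs={"class": "Tech_head"})

# .text holds the text inside the tag, without the surrounding markup.
tech_text = tech_head.text
print(tech_text)
```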

Would return:

Technology

Instead of:

<div class="Tech_head">Technology</div>

The last thing we will look at is extracting the value of an attribute in a tag. Looking at the snippet, you will see this tag:

<img src="xyzlady.jpg" alt="lady" align="right" />

We can very easily extract the value of the src attribute. That is done with the following code:

This would return the following answer:

xyzlady.jpg
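One way to sketch this: a tag behaves like a dictionary of its attributes, so the value can be read with square brackets. The HTML string below is a stand-in containing only the <img> tag, and "html.parser" is an assumed parser choice.

```python
from bs4 import BeautifulSoup as bs

# Stand-in containing only the <img> tag from the snippet (assumption).
html = '<img src="xyzlady.jpg" alt="lady" align="right" />'

htmlcontent = bs(html, "html.parser")

img = htmlcontent.find("img")

# Square brackets read an attribute; a missing attribute raises KeyError.
src = img["src"]
print(src)

# .get() returns None for a missing attribute instead of raising.
title = img.get("title")
print(title)
```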

In cases where there are lots of <img> tags and we want to get one by its attributes, we can use the attrs parameter as shown earlier.

There you have it. The find() and find_all() methods are going to be the most useful ones for you when it comes to web scraping with BeautifulSoup. However, there are some other tricks which you will discover as you tackle more difficult websites.
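For example, assuming a page with two images (the second <img> tag, logo.png, is hypothetical and added only for this illustration), a sketch of picking one by its alt attribute could look like this:

```python
from bs4 import BeautifulSoup as bs

# The second <img> tag is hypothetical, added only to have two candidates.
html = """
<img src="xyzlady.jpg" alt="lady" align="right" />
<img src="logo.png" alt="logo" />
"""

htmlcontent = bs(html, "html.parser")

# attrs narrows the search to <img> tags whose alt attribute is "logo".
logo = htmlcontent.find("img", attrs={"alt": "logo"})
logo_src = logo["src"]
print(logo_src)
```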